The COVID-19 pandemic has forced universities to complete their degree programs online. Spain's National Conference of Medical School Deans coordinates an objective, structured assessment of clinical competencies, the Objective Structured Clinical Examination (OSCE), which consists of 20 face-to-face stations for students in their sixth year of study. As a consequence of the pandemic, a substitute OSCE based on computer-based case simulations (CCS-OSCE) was designed. The objective of this article is to describe the creation, administration, and development of the test.

Materials and methods

This work is a descriptive study of the joint CCS-OSCE, from its planning stages in April 2020 to its administration in June 2020.

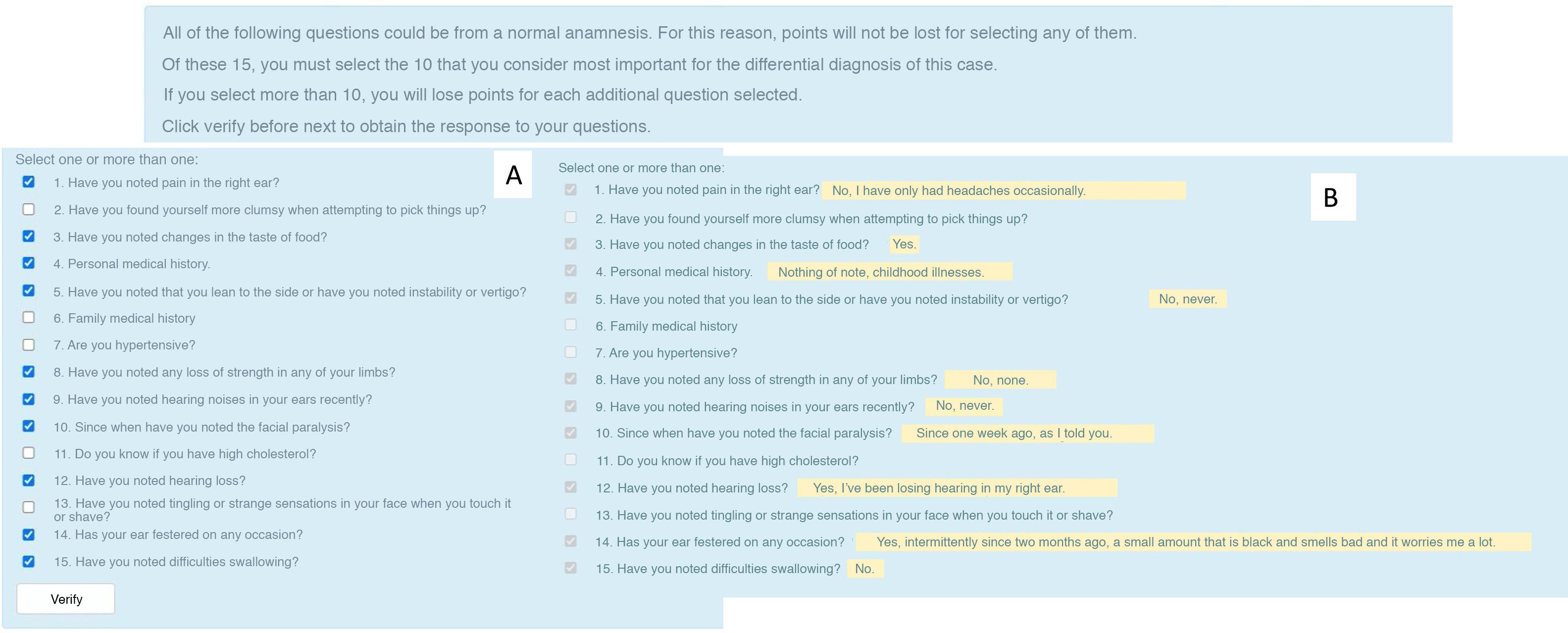

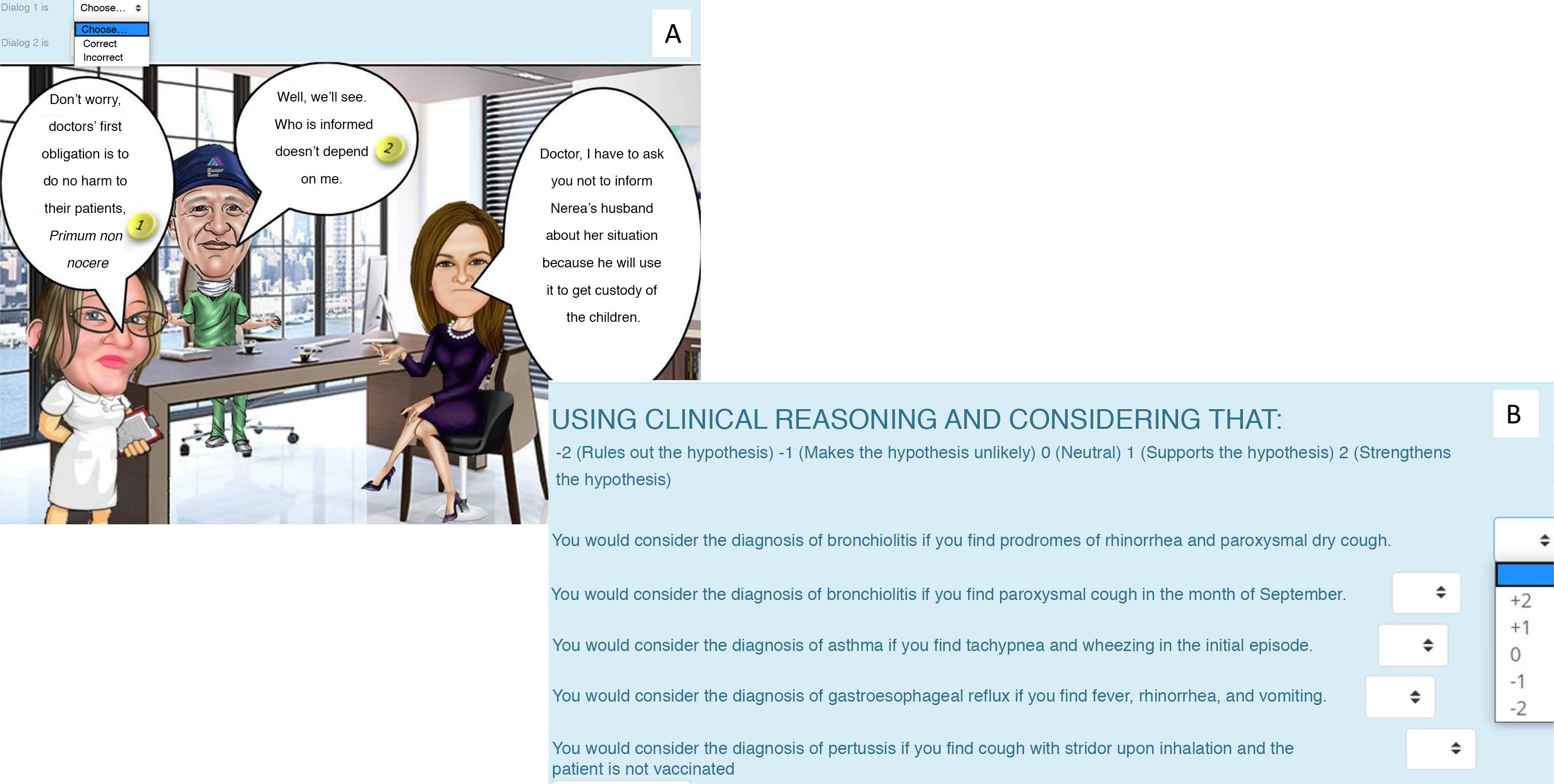

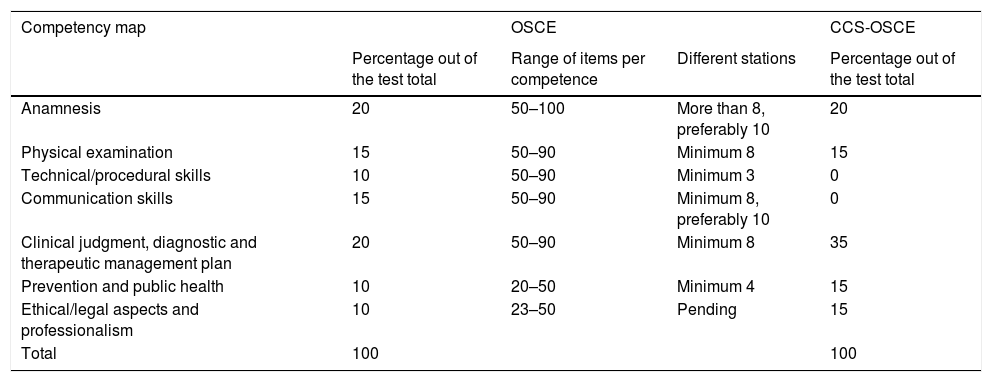

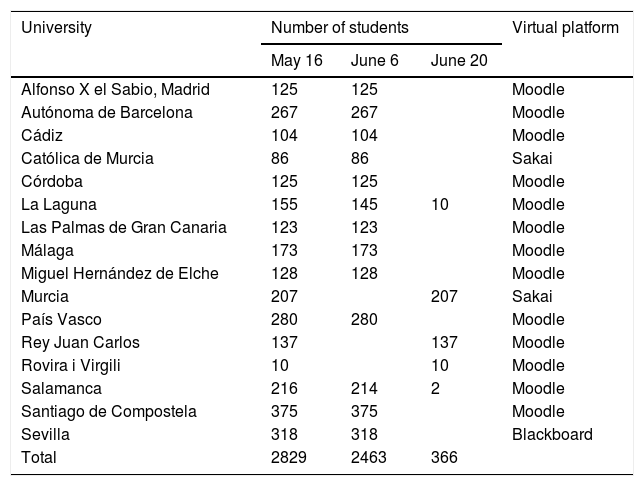

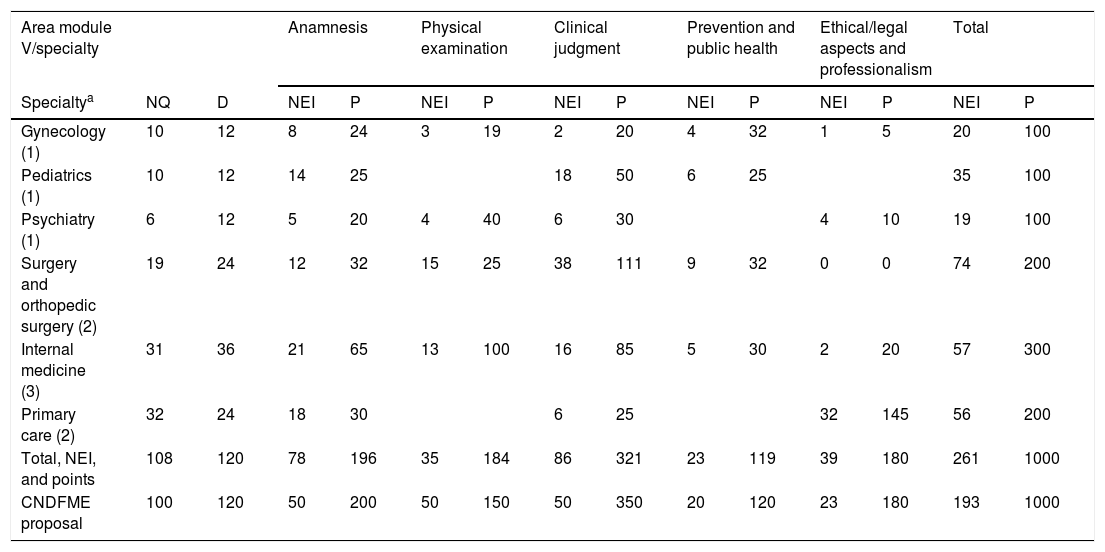

Results

The CCS-OSCE evaluated competencies in anamnesis, physical examination, clinical judgment, ethical aspects, interprofessional relations, prevention, and health promotion. Technical and communication skills were not evaluated. The CCS-OSCE consisted of ten stations, each with a 12-min time limit and between six and 21 questions (mean: 1.1 min/question). It was administered on the virtual campus platform of each of the 16 participating medical schools, which together had 2829 students in their sixth year of study, and was held jointly on two dates in June 2020.

Conclusions

The CCS-OSCE experience made it possible to bring together the various medical schools and carry out interdisciplinary work. The CCS-OSCE as administered may resemble the Step 3 CCS of the United States Medical Licensing Examination.

